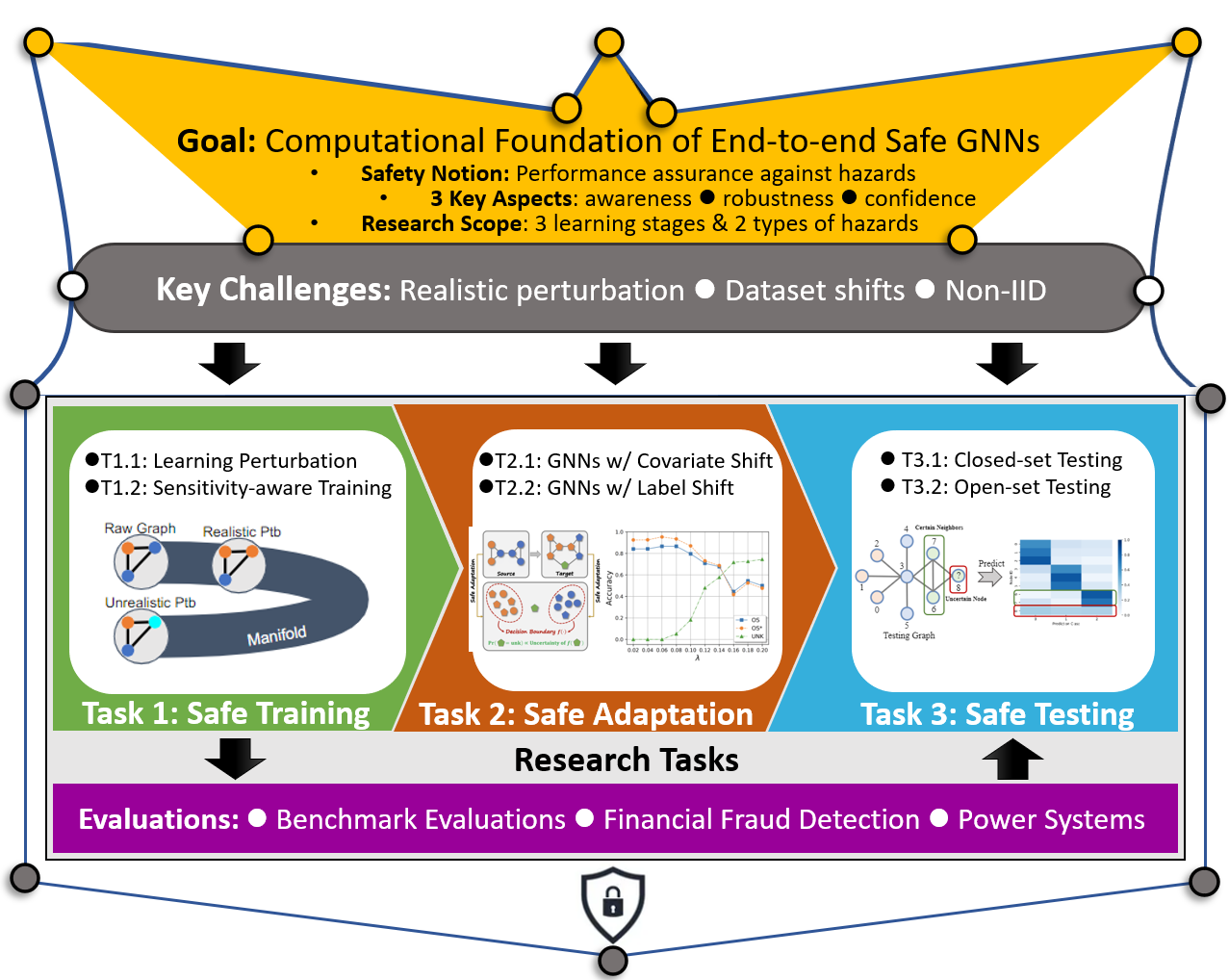

NetSafe: Towards a Computational Foundation of Safe Graph Neural Networks

Overview

Graph neural networks (GNNs) are a family of deep learning methods designed for network and graph data. The existing literature on GNNs offers rich theories, algorithms, and systems for designing GNN architectures, training GNNs with strong empirical performance, and analyzing their generalization performance and expressive power. However, even as the application landscape of GNNs continues to broaden and deepen, the fundamental safety issues of GNNs remain less well studied, and several important questions are largely open. To name a few: How can one rigorously and quantitatively measure the safety of a GNN model? How safe are existing GNN models in the presence of hazards? How can one make GNNs safer during the training, adaptation, and testing stages? What are the fundamental limits and costs of enforcing the safety of GNNs?

This project investigates the end-to-end safety of graph neural networks, with a systematic effort to examine safety issues across the entire life cycle of GNNs, taking into consideration various types of dataset shift and realistic external perturbations. The project consists of three integral research thrusts: (Thrust 1) safe graph neural network training, (Thrust 2) safe graph neural network adaptation, and (Thrust 3) safe graph neural network testing.

Contact: Hanghang Tong